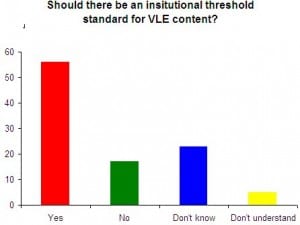

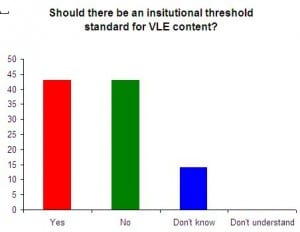

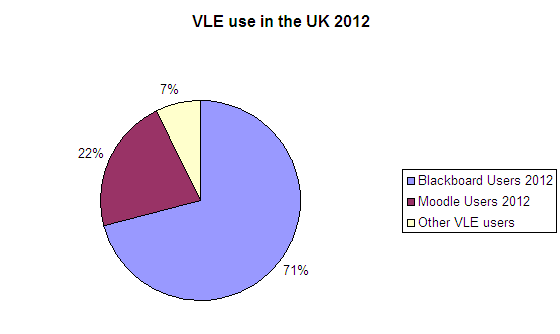

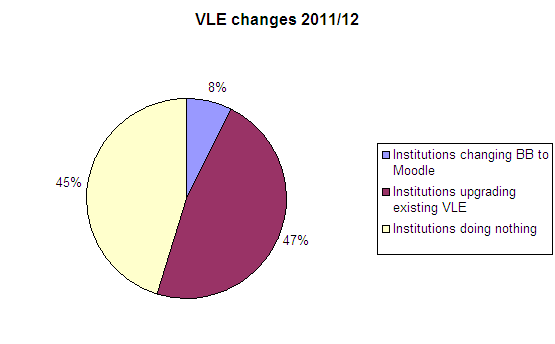

The other day I blogged about the gap between the theory of providing material via an institutional VLE, as seen from the perspective of an educational developer, and the reality of doing so as I experienced it as an academic. My feeling was that most of us (academics, that is, though, I reiterate, by no means all academics) tend to see it as a content repository, and that many students regard it in the same light. As it happens, there has recently been a very interesting debate about the purpose of VLEs on the ALT Jiscmail list. One of the points made there was that the VLE tends to shape our way of thinking about technology, and I think there is something in that. Of course there are many other tools out there besides VLEs, and I was quite impressed with this attempt, posted by a contributor to that debate, to incorporate some of them into Blackboard:

http://wishfulthinkinginmedicaleducation.blogspot.co.uk/2010/03/prezi-workaround.html

However, for better or worse, Lincoln and many other institutions are likely to continue with some form of VLE for the foreseeable future. As I said in the last post, I actually deconstructed a VLE site (Blackboard in this case) that had accumulated about five years’ worth of material. One of the first challenges in any kind of research (and I maintain that this is a form of research) is analysis. So, bearing in mind Bourdieu’s warnings about the malleability of classes, and the way the field in which they operate tends to define them, here is a list of the classes of material I found. At first sight it reminded me a little of Borges’s Celestial Emporium of Benevolent Knowledge, insofar as it has very little in common with recognised practice in the field of education.

- PowerPoint slide sets used in lectures that are substitutes for lecture content

- PowerPoint slides designed for use in class discussions

- Word/PDF documents designed as handouts

- Word/PDF documents that are drafts of articles

- Word/PDF documents that contain downloaded articles

- Web links to open access journal articles

- Web links to journal articles on publishers’ sites that have copyright clearance

- Web links to journal articles on publishers’ sites that do not have copyright clearance

- Web links that are out of date

- Web links that are broken

- Web links that work

- Blackboard wiki pages

- Blog entries

- Contributions to discussion groups

- Audio recordings of lectures

- Video clips

- Video clips that no longer work

- Administrative documents

- Assignment submission instructions

- Those that, at a distance, resemble flies.

(Oh all right, I just took that last one from the Celestial Emporium)

While it looks as though I have emphasised form and function over content here, that’s partly to make the point that form and function tend to dominate technological discourse. I did also give each item up to three subject-based keywords, and the new site is in fact organised by topic, because I thought that would be of more interest to the students. But I thought the listing by form was interesting too, because inherent in it are quite a lot of assumptions about what is helpful for student learning. Yes, there’s a variety of forms, but is the same content available in each form? (No, of course not, though it should be, if only to promote accessibility.)

Form is important in technology. Not everyone can open Word 2010 documents, for example, and certainly not everyone has broadband fast enough to download video clips. What does the existence of broken, out-of-date and copyright-infringing material (which, let me reiterate, has now all been removed) tell us about our attitude to providing this material? This is one site in one department in one university, but I’ll bet it’s not atypical. What I would really like to do is carry out a set of case studies of sites in different institutions and different disciplines. The purpose of doing so, as with any case study, is not to generalise, but to learn from what other people are doing and improve practice. Yes, sometimes that will involve being (constructively) critical of practice, but case studies can just as easily uncover excellent and otherwise hidden practice. While the last couple of posts may sound as though I’m very critical of the site as it was previously conceived, I do think it made a lot of good and useful material available to students. (They just couldn’t find it!)

As I say, I think it would be really useful to research this on a wider basis, but there’s an obvious methodological challenge. Since I don’t have access to sites anywhere other than Lincoln, if I ask for participants, there’s a real risk of only being given access to sites that participants want me to see. On the other hand, extreme cases can be very informative in qualitative research. That’s a discussion for the research proposal, though. On the basis of this case, I think there is an argument to be made that it is too easy for function to follow form, and for both of them to overshadow content in VLEs, and perhaps in e-learning generally.